Premise: connect a USB sound device to a Raspberry Pi 2 and stream audio from it

I tested this on a Raspberry Pi 2, but the same steps should basically work on a Raspberry Pi 3/4 as well.

Check whether the device is recognized with lsusb.

It is recognized as "C-Media Electronics, Inc. CM108 Audio Controller".

$ lsusb

Bus 001 Device 002: ID 0424:9514 Standard Microsystems Corp.

Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Bus 001 Device 003: ID 0424:ec00 Standard Microsystems Corp.

Bus 001 Device 004: ID 0d8c:013c C-Media Electronics, Inc. CM108 Audio Controller

Bus 001 Device 005: ID 0bda:2838 Realtek Semiconductor Corp. RTL2838 DVB-T

# The priority (card order) of the sound devices can be checked with the following command.

$ cat /proc/asound/modules

0 snd_usb_audio

1 snd_bcm2835
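Card numbering is not guaranteed to stay the same across boots or systems, so it can be safer to look the index up than to hard-code it. The following is a minimal sketch, assuming the two-column "index module" format shown above; the function name `card_index_for_module` is my own and not part of ALSA.

```shell
# Print the ALSA card index that a given kernel module is bound to.
# Reads text in the format of /proc/asound/modules on stdin.
# Exits non-zero when the module is not listed.
card_index_for_module() {
    awk -v module="$1" '
        $2 == module { print $1; found = 1; exit }
        END { exit !found }
    '
}

# Typical use on the Pi (not run here):
#   card=$(card_index_for_module snd_usb_audio < /proc/asound/modules)
#   echo "hw:${card},0"
```

The resulting index is the card number used in the `hw:card,device` notation that appears later in this post.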

I used this as a reference. Thank you.

Installing FFserver (release/2.8 was the most stable)

FFserver is no longer supported in the latest ffmpeg, so fetch an FFserver-capable release from git (release/2.8 worked; release/3.4 did not work well).

# Use git "-b <version>" to clone a specific version

git clone git://source.ffmpeg.org/ffmpeg.git -b release/2.8

Cloning into 'ffmpeg'...

remote: Enumerating objects: 614118, done.

remote: Counting objects: 100% (614118/614118), done.

remote: Compressing objects: 100% (124386/124386), done.

remote: Total 614118 (delta 493889), reused 607415 (delta 488483)

Receiving objects: 100% (614118/614118), 120.29 MiB | 336.00 KiB/s, done.

Resolving deltas: 100% (493889/493889), done.

Checking out files: 100% (6584/6584), done.
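Since ffserver only exists in older release branches, a small guard before a multi-hour build can catch a wrong branch name early. This is a sketch under the assumption that ffserver was dropped starting with FFmpeg 4.0 (so any `release/N.M` branch with N below 4 still contains it); `branch_has_ffserver` is a hypothetical helper name.

```shell
# Succeed if a branch name such as "release/2.8" predates FFmpeg 4.0,
# i.e. should still ship ffserver. Returns 2 for names it cannot parse.
branch_has_ffserver() {
    version=${1#release/}      # strip the "release/" prefix
    major=${version%%.*}       # keep only the major version number
    case $major in
        ''|*[!0-9]*) return 2 ;;
    esac
    [ "$major" -lt 4 ]
}

# Example (not run here):
#   branch_has_ffserver release/2.8 && git clone git://source.ffmpeg.org/ffmpeg.git -b release/2.8
```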

# make takes a long time, so start screen first @2021/01/20 11:40

screen -R FFSERVER

cd ./ffmpeg

./configure

make && sudo make install

# A few hours later ffserver has been built, so copy the conf file to /etc

sudo cp ./ffserver.conf /etc/

cat /etc/ffserver.conf

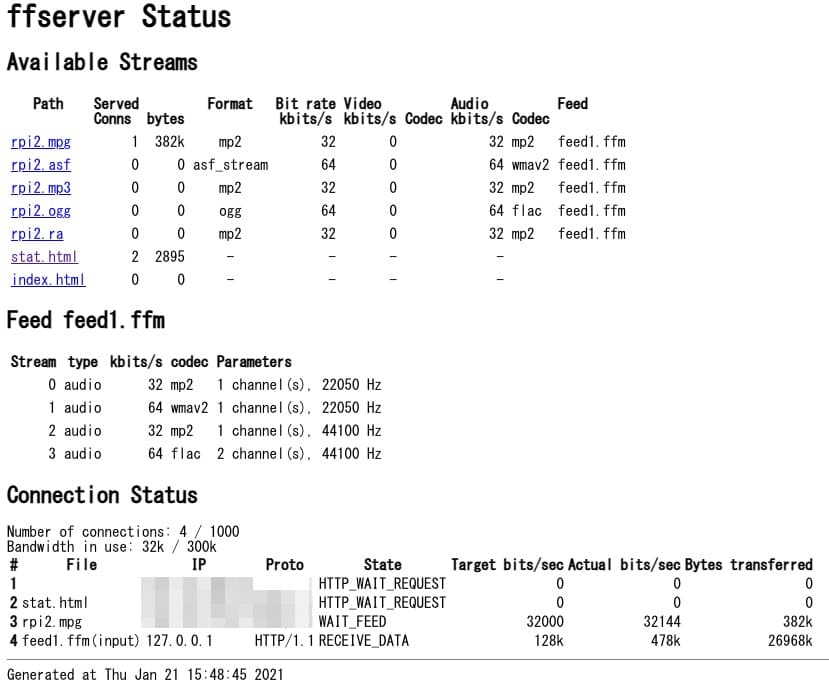

The server status is shown at http://server-ip:8090/stat.html
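Each active `<Stream name>` block in the configuration listed below becomes a URL of the form `http://server-ip:8090/name`. As a rough sketch (the function name `list_stream_urls` is mine, and it only looks at uncommented `<Stream ...>` lines), the available endpoints can be extracted from the conf file like this:

```shell
# Print one URL per active (uncommented) <Stream ...> block.
# Reads ffserver.conf text on stdin; $1 = host, $2 = port.
list_stream_urls() {
    sed -n 's/^[[:space:]]*<Stream[[:space:]]\{1,\}\([^>]*\)>.*$/\1/p' |
    while read -r name; do
        printf 'http://%s:%s/%s\n' "$1" "$2" "$name"
    done
}

# Example (not run here):
#   list_stream_urls server-ip 8090 < /etc/ffserver.conf
```

Lines such as `#<Stream test.mjpg>` are skipped because they start with a comment character.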

cat ./ffserver.conf

# Port on which the server is listening. You must select a different

# port from your standard HTTP web server if it is running on the same

# computer.

Port 8090

# Address on which the server is bound. Only useful if you have

# several network interfaces.

BindAddress 0.0.0.0

# Number of simultaneous HTTP connections that can be handled. It has

# to be defined *before* the MaxClients parameter, since it defines the

# MaxClients maximum limit.

MaxHTTPConnections 2000

# Number of simultaneous requests that can be handled. Since FFServer

# is very fast, it is more likely that you will want to leave this high

# and use MaxBandwidth, below.

MaxClients 1000

# This the maximum amount of kbit/sec that you are prepared to

# consume when streaming to clients.

MaxBandwidth 300

#MaxBandwidth 1000

#MaxBandwidth 50

# Access log file (uses standard Apache log file format)

# '-' is the standard output.

#CustomLog -

CustomLog /var/log/ffserver.log

# Suppress that if you want to launch ffserver as a daemon.

NoDaemon

##################################################################

# Definition of the live feeds. Each live feed contains one video

# and/or audio sequence coming from an ffmpeg encoder or another

# ffserver. This sequence may be encoded simultaneously with several

# codecs at several resolutions.

<Feed feed1.ffm>

# You must use 'ffmpeg' to send a live feed to ffserver. In this

# example, you can type:

#

# ffmpeg http://localhost:8090/feed1.ffm

# ffserver can also do time shifting. It means that it can stream any

# previously recorded live stream. The request should contain:

# "http://xxxx?date=[YYYY-MM-DDT][[HH:]MM:]SS[.m...]".You must specify

# a path where the feed is stored on disk. You also specify the

# maximum size of the feed, where zero means unlimited. Default:

# File=/tmp/feed_name.ffm FileMaxSize=5M

File /tmp/feed1.ffm

#FileMaxSize 200K

FileMaxSize 10000K

# You could specify

# ReadOnlyFile /saved/specialvideo.ffm

# This marks the file as readonly and it will not be deleted or updated.

# Specify launch in order to start ffmpeg automatically.

# First ffmpeg must be defined with an appropriate path if needed,

# after that options can follow, but avoid adding the http:// field

#Launch ffmpeg

# Only allow connections from localhost to the feed.

ACL allow 127.0.0.1

</Feed>

##################################################################

# Now you can define each stream which will be generated from the

# original audio and video stream. Each format has a filename (here

# 'test1.mpg'). FFServer will send this stream when answering a

# request containing this filename.

<Stream rpi2.mpg>

# coming from live feed 'feed1'

Feed feed1.ffm

# Format of the stream : you can choose among:

# mpeg : MPEG-1 multiplexed video and audio

# mpegvideo : only MPEG-1 video

# mp2 : MPEG-2 audio (use AudioCodec to select layer 2 and 3 codec)

# ogg : Ogg format (Vorbis audio codec)

# rm : RealNetworks-compatible stream. Multiplexed audio and video.

# ra : RealNetworks-compatible stream. Audio only.

# mpjpeg : Multipart JPEG (works with Netscape without any plugin)

# jpeg : Generate a single JPEG image.

# asf : ASF compatible streaming (Windows Media Player format).

# swf : Macromedia Flash compatible stream

# avi : AVI format (MPEG-4 video, MPEG audio sound)

#Format mpeg

Format mp2

#Format ogg -> NG

#Format swf -> NG

# Bitrate for the audio stream. Codecs usually support only a few

# different bitrates.

AudioBitRate 32

#AudioBitRate 16

# Number of audio channels: 1 = mono, 2 = stereo

AudioChannels 1

# Sampling frequency for audio. When using low bitrates, you should

# lower this frequency to 22050 or 11025. The supported frequencies

# depend on the selected audio codec.

#AudioSampleRate 44100

AudioSampleRate 22050

# Bitrate for the video stream

#VideoBitRate 64

# Ratecontrol buffer size

#VideoBufferSize 40

# Number of frames per second

#VideoFrameRate 3

# Size of the video frame: WxH (default: 160x128)

# The following abbreviations are defined: sqcif, qcif, cif, 4cif, qqvga,

# qvga, vga, svga, xga, uxga, qxga, sxga, qsxga, hsxga, wvga, wxga, wsxga,

# wuxga, woxga, wqsxga, wquxga, whsxga, whuxga, cga, ega, hd480, hd720,

# hd1080

#VideoSize 160x128

# Transmit only intra frames (useful for low bitrates, but kills frame rate).

#VideoIntraOnly

# If non-intra only, an intra frame is transmitted every VideoGopSize

# frames. Video synchronization can only begin at an intra frame.

#VideoGopSize 12

# More MPEG-4 parameters

# VideoHighQuality

# Video4MotionVector

# Choose your codecs:

AudioCodec mp2

#VideoCodec mpeg1video

# Suppress audio

#NoAudio

# Suppress video

NoVideo

#VideoQMin 3

#VideoQMax 31

# Set this to the number of seconds backwards in time to start. Note that

# most players will buffer 5-10 seconds of video, and also you need to allow

# for a keyframe to appear in the data stream.

Preroll 30

# ACL:

# You can allow ranges of addresses (or single addresses)

#ACL ALLOW <first address>

# You can deny ranges of addresses (or single addresses)

#ACL DENY <first address>

# You can repeat the ACL allow/deny as often as you like. It is on a per

# stream basis. The first match defines the action. If there are no matches,

# then the default is the inverse of the last ACL statement.

#

# Thus 'ACL allow localhost' only allows access from localhost.

# 'ACL deny 1.0.0.0 1.255.255.255' would deny the whole of network 1 and

# allow everybody else.

</Stream>

##################################################################

# Example streams

# Multipart JPEG

#<Stream test.mjpg>

#Feed feed1.ffm

#Format mpjpeg

#VideoFrameRate 2

#VideoIntraOnly

#NoAudio

#Strict -1

#</Stream>

# Single JPEG

#<Stream test.jpg>

#Feed feed1.ffm

#Format jpeg

#VideoFrameRate 2

#VideoIntraOnly

##VideoSize 352x240

#NoAudio

#Strict -1

#</Stream>

# Flash -> NG

#<Stream live.swf>

#Feed feed1.ffm

#Format swf

#VideoFrameRate 2

#VideoIntraOnly

#NoAudio

#NoVideo

#</Stream>

# ASF compatible

<Stream rpi2.asf>

Feed feed1.ffm

Format asf

VideoFrameRate 15

VideoSize 352x240

VideoBitRate 256

VideoBufferSize 40

VideoGopSize 30

AudioBitRate 64

StartSendOnKey

</Stream>

# MP3 audio

<Stream rpi2.mp3>

Feed feed1.ffm

Format mp2

AudioCodec mp2

#AudioBitRate 64

AudioBitRate 32

#AudioBitRate 16

AudioChannels 1

AudioSampleRate 44100

#AudioSampleRate 22050

PreRoll 500

NoVideo

</Stream>

# Ogg Vorbis audio

<Stream rpi2.ogg>

Feed feed1.ffm

Title "Stream title"

AudioBitRate 64

AudioChannels 2

AudioSampleRate 44100

NoVideo

</Stream>

# Real with audio only at 32 kbits

<Stream rpi2.ra>

Feed feed1.ffm

#Format rm

Format mp2

AudioBitRate 32

NoVideo

#PreRoll 50

PreRoll 5000

#NoAudio

</Stream>

# Real with audio and video at 64 kbits

#<Stream test.rm>

#Feed feed1.ffm

#Format rm

#AudioBitRate 32

#VideoBitRate 128

#VideoFrameRate 25

#VideoGopSize 25

#NoAudio

#</Stream>

##################################################################

# A stream coming from a file: you only need to set the input

# filename and optionally a new format. Supported conversions:

# AVI -> ASF

#<Stream file.rm>

#File "/usr/local/httpd/htdocs/tlive.rm"

#NoAudio

#</Stream>

#<Stream file.asf>

#File "/usr/local/httpd/htdocs/test.asf"

#NoAudio

#Author "Me"

#Copyright "Super MegaCorp"

#Title "Test stream from disk"

#Comment "Test comment"

#</Stream>

##################################################################

# RTSP examples

#

# You can access this stream with the RTSP URL:

# rtsp://localhost:5454/test1-rtsp.mpg

#

# A non-standard RTSP redirector is also created. Its URL is:

# http://localhost:8090/test1-rtsp.rtsp

#<Stream test1-rtsp.mpg>

#Format rtp

#File "/usr/local/httpd/htdocs/test1.mpg"

#</Stream>

# Transcode an incoming live feed to another live feed,

# using libx264 and video presets

#<Stream live.h264>

#Format rtp

#Feed feed1.ffm

#VideoCodec libx264

#VideoFrameRate 24

#VideoBitRate 100

#VideoSize 480x272

#AVPresetVideo default

#AVPresetVideo baseline

#AVOptionVideo flags +global_header

#

#AudioCodec libfaac

#AudioBitRate 32

#AudioChannels 2

#AudioSampleRate 22050

#AVOptionAudio flags +global_header

#</Stream>

##################################################################

# SDP/multicast examples

#

# If you want to send your stream in multicast, you must set the

# multicast address with MulticastAddress. The port and the TTL can

# also be set.

#

# An SDP file is automatically generated by ffserver by adding the

# 'sdp' extension to the stream name (here

# http://localhost:8090/test1-sdp.sdp). You should usually give this

# file to your player to play the stream.

#

# The 'NoLoop' option can be used to avoid looping when the stream is

# terminated.

#<Stream test1-sdp.mpg>

#Format rtp

#File "/usr/local/httpd/htdocs/test1.mpg"

#MulticastAddress 224.124.0.1

#MulticastPort 5000

#MulticastTTL 16

#NoLoop

#</Stream>

##################################################################

# Special streams

# Server status

<Stream stat.html>

Format status

# Only allow local people to get the status

ACL allow localhost

ACL allow 192.168.0.0 192.168.255.255

#FaviconURL http://pond1.gladstonefamily.net:8080/favicon.ico

</Stream>

# Redirect index.html to the appropriate site

<Redirect index.html>

URL http://www.ffmpeg.org/

</Redirect>

Running FFserver

/usr/local/bin/ffserver -f /etc/ffserver.conf >> /tmp/FFserver_streaming.log

FFmpeg attempt 1 → NG: ALSA device support missing

Starting ffmpeg

ffmpeg version n3.4.8-5-g8f5e16b5f1 Copyright (c) 2000-2020 the FFmpeg developers

built with gcc 8 (Raspbian 8.3.0-6+rpi1)

configuration:

libavutil 55. 78.100 / 55. 78.100

libavcodec 57.107.100 / 57.107.100

libavformat 57. 83.100 / 57. 83.100

libavdevice 57. 10.100 / 57. 10.100

libavfilter 6.107.100 / 6.107.100

libswscale 4. 8.100 / 4. 8.100

libswresample 2. 9.100 / 2. 9.100

Unknown input format: 'alsa'

Installing the missing library (alsa)

sudo apt-get install libasound2-dev

cd ~/ffmpeg

./configure

... alsa! ..

make

./ffmpeg -formats |grep alsa

... DE alsa! .. (the D/E columns indicate both directions are working)

sudo make install

Reference page
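The `grep alsa` check above can also be scripted so that a rebuild without ALSA support is noticed before starting the stream. This is a minimal sketch that assumes the usual `ffmpeg -formats` layout (a flags column such as `DE`, then the format name); `has_alsa_input` is a hypothetical helper name.

```shell
# Succeed if `ffmpeg -formats` output (on stdin) lists "alsa"
# with the D (demuxing, i.e. input) flag set.
has_alsa_input() {
    awk '$2 == "alsa" && $1 ~ /D/ { found = 1 } END { exit !found }'
}

# Example (not run here):
#   ./ffmpeg -formats 2>/dev/null | has_alsa_input || echo "alsa input missing: install libasound2-dev and rebuild"
```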

FFmpeg attempt 2 → NG: wrong USB sound device specified

Starting ffmpeg

ffmpeg version n2.8.17 Copyright (c) 2000-2020 the FFmpeg developers

built with gcc 8 (Raspbian 8.3.0-6+rpi1)

configuration:

libavutil 55. 78.100 / 55. 78.100

libavcodec 57.107.100 / 57.107.100

libavformat 57. 83.100 / 57. 83.100

libavdevice 57. 10.100 / 57. 10.100

libavfilter 6.107.100 / 6.107.100

libswscale 4. 8.100 / 4. 8.100

libswresample 2. 9.100 / 2. 9.100

[alsa @ 0x2a32390] cannot open audio device hw:0,0 (No such file or directory)

hw:0,0: Input/output error

Checking the USB sound device

Format: hw:CARD,DEVICE (card number, device number)

The device is recognized as "card 1, device 0", so the sound device is "hw:1,0".
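The `aplay -l` listing that follows can be turned into `hw:card,device` strings mechanically. The sketch below assumes English (`LANG=C`) output in the "card N: ..., device M: ..." format; `alsa_hw_ids` is a name I made up. Note that `aplay -l` lists playback devices, while `arecord -l` prints capture devices in the same format, which is the more relevant list when recording.

```shell
# Convert "card N: NAME, device M: ..." lines (on stdin) into
# "hw:N,M NAME" lines; all other lines are ignored.
alsa_hw_ids() {
    sed -n 's/^card \([0-9][0-9]*\): \(.*\), device \([0-9][0-9]*\): .*$/hw:\1,\3 \2/p'
}

# Example (not run here):
#   LANG=C arecord -l | alsa_hw_ids
```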

sudo aplay -l

**** List of PLAYBACK Hardware Devices ****

card 0: Headphones [bcm2835 Headphones], device 0: bcm2835 Headphones [bcm2835 Headphones]

Subdevices: 8/8

Subdevice #0: subdevice #0

Subdevice #1: subdevice #1

Subdevice #2: subdevice #2

Subdevice #3: subdevice #3

Subdevice #4: subdevice #4

Subdevice #5: subdevice #5

Subdevice #6: subdevice #6

Subdevice #7: subdevice #7

card 1: Set [C-Media USB Headphone Set], device 0: USB Audio [USB Audio]

Subdevices: 1/1

Subdevice #0: subdevice #0

FFmpeg attempt 3 -> OK!

# Run FFmpeg

sudo /usr/local/bin/ffmpeg -ac 1 -f alsa -ar 4000 -i "hw:1,0" -acodec mp2 -ab 32k -ac 1 http://localhost:8090/feed1.ffm

ffmpeg version n2.8.17 Copyright (c) 2000-2020 the FFmpeg developers

built with gcc 8 (Raspbian 8.3.0-6+rpi1)

configuration:

libavutil 54. 31.100 / 54. 31.100

libavcodec 56. 60.100 / 56. 60.100

libavformat 56. 40.101 / 56. 40.101

libavdevice 56. 4.100 / 56. 4.100

libavfilter 5. 40.101 / 5. 40.101

libswscale 3. 1.101 / 3. 1.101

libswresample 1. 2.101 / 1. 2.101

Guessed Channel Layout for Input Stream #0.0 : mono

Input #0, alsa, from 'hw:1,0':

Duration: N/A, start: 1611211271.855956, bitrate: 705 kb/s

Stream #0:0: Audio: pcm_s16le, 44100 Hz, 1 channels, s16, 705 kb/s

Output #0, ffm, to 'http://127.0.0.1:8090/feed1.ffm':

Metadata:

creation_time : 2021-01-21 15:41:11

encoder : Lavf56.40.101

Stream #0:0: Audio: mp2, 22050 Hz, mono, s16, 32 kb/s

Metadata:

encoder : Lavc56.60.100 mp2

Stream #0:1: Audio: wmav2, 22050 Hz, mono, fltp, 64 kb/s

Metadata:

encoder : Lavc56.60.100 wmav2

Stream #0:2: Audio: mp2, 44100 Hz, mono, s16, 32 kb/s

Metadata:

encoder : Lavc56.60.100 mp2

Stream #0:3: Audio: flac, 44100 Hz, stereo, s16, 64 kb/s

Metadata:

encoder : Lavc56.60.100 flac

Stream mapping:

Stream #0:0 -> #0:0 (pcm_s16le (native) -> mp2 (native))

Stream #0:0 -> #0:1 (pcm_s16le (native) -> wmav2 (native))

Stream #0:0 -> #0:2 (pcm_s16le (native) -> mp2 (native))

Stream #0:0 -> #0:3 (pcm_s16le (native) -> flac (native))

Press [q] to stop, [?] for help

size= 984kB time=00:00:16.96 bitrate= 475.1kbits/s

Checking the stream status: http://server-ip:8090/

At this point the audio stream can be received with VLC or a similar player at the address "http://server-ip:8090/rpi2.mpg".

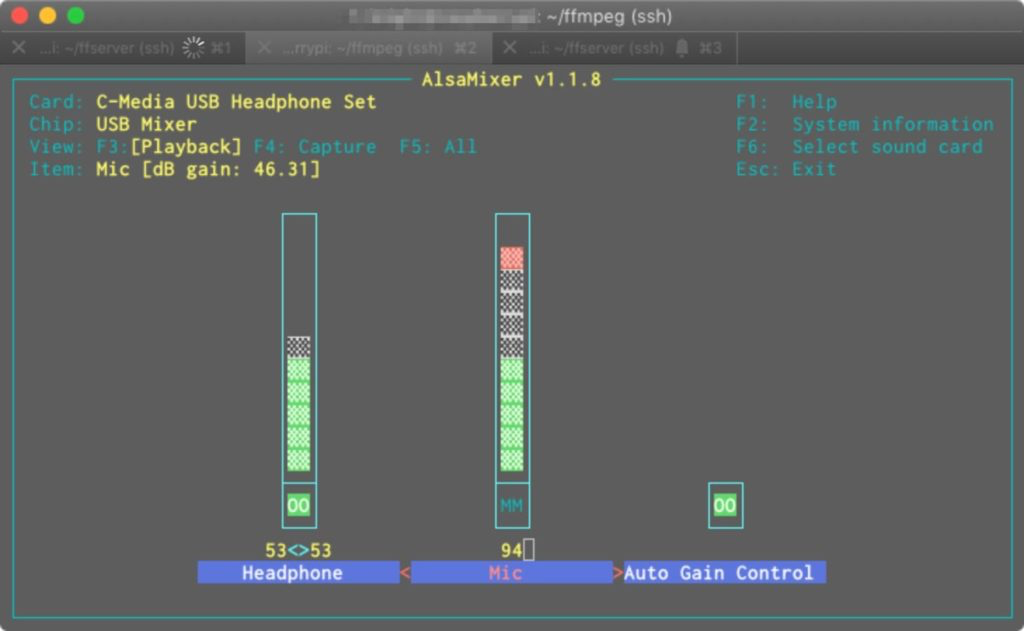
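The configuration in this post sets `MaxBandwidth 300` (kbit/s) while `rpi2.mpg` is encoded at `AudioBitRate 32`, so the bandwidth cap, not `MaxClients`, is likely what bounds the number of simultaneous listeners. A rough estimate, assuming each listener consumes just the nominal audio bitrate and ignoring container overhead (`max_listeners` is a hypothetical helper):

```shell
# Estimate how many listeners fit under MaxBandwidth.
# $1 = MaxBandwidth in kbit/s, $2 = per-listener stream bitrate in kbit/s
max_listeners() {
    [ "$2" -gt 0 ] || return 1
    echo $(( $1 / $2 ))
}

max_listeners 300 32   # prints 9
```

If more listeners are expected, raising `MaxBandwidth` in ffserver.conf would be the setting to revisit.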

Adjusting the input volume: sudo alsamixer

Press F6 to select the device and ESC to exit.

Reference: FFserver_FFmpeg_start-daemon.sh

#!/bin/bash

#prgfile=<Program Script filepath>

# FFserver

prgfile="/usr/local/bin/ffserver -f /etc/ffserver.conf > /dev/null 2>&1"

prgfile="/usr/local/bin/ffserver -loglevel quiet -f /etc/ffserver.conf > /dev/null 2>&1"

curdir="/home/USER/ffserver/"

prgtitle=ffserver

#pidfile=<PID filepath>

pidfile=/tmp/$prgtitle.pid

# FFmpeg

# x201

prgfile2="/usr/local/bin/ffmpeg -ac 2 -f alsa -ar 16000 -i default -loglevel quiet -acodec mp2 -ab 64k -ac 1 http://127.0.0.1:8090/feed1.ffm "

# Raspberry Pi

prgfile2="sudo /usr/local/bin/ffmpeg -ac 1 -f alsa -ar 4000 -i "hw:1,0" -loglevel quiet -acodec mp2 -ab 32k -ac 1 http://localhost:8090/feed1.ffm "

prgtitle2=ffmpeg

pidfile2=/tmp/$prgtitle2.pid

start() {

if [ -f $pidfile ]; then

pid=`cat $pidfile`

kill -0 $pid >& /dev/null

if [ $? -eq 0 ]; then

echo "Daemon has started."

return 1

fi

fi

cd $curdir

# Starting FFserver

echo "Starting $prgfile"

$prgfile &

#pgrep -fl ffserver | awk '{print $1}' > $pidfile

pgrep -fl $prgtitle | awk '{print $1}' > $pidfile

# Starting FFmpeg

sleep 1

echo "Starting $prgfile2"

$prgfile2 > /tmp/ffmpeg.log &

#pgrep -fl ffserver | awk '{print $1}' > $pidfile2

#pgrep -fl ffmpeg | awk '{print $1}' > $pidfile2

pgrep -fl $prgtitle2 | awk '{print $1}' > $pidfile2

if [ $? -eq 0 ]; then

echo "Daemon started."

pid=`cat $pidfile`

pid2=`cat $pidfile2`

echo "Daemon $prgtitle is started. (PID: ${pid})"

echo "Daemon $prgtitle2 is started. (PID: ${pid2})"

return 0

else

echo "Failed to start daemon."

return 1

fi

}

stop() {

if [ ! -f $pidfile ]; then

echo "Daemon not started."

return 1

fi

pid=`cat $pidfile`

pid2=`cat $pidfile2`

kill $pid >& /dev/null

if [ $? -ne 0 ]; then

echo "Operation not permitted."

return 1

fi

echo -n "Stopping daemon..."

while true

do

# Leave the loop only once both ffserver and ffmpeg have exited.

if ! kill -0 $pid >& /dev/null && ! kill -0 $pid2 >& /dev/null; then

break

fi

sleep 2

echo -n "."

done

rm -rf $pidfile

rm -rf $pidfile2

echo -e "\nDaemon stopped."

return 0

}

status() {

# FFserver

if [ -f $pidfile ]; then

pid=`cat $pidfile`

pid2=`cat $pidfile2`

kill -0 $pid >& /dev/null

if [ $? -eq 0 ]; then

echo "Daemon $prgtitle is running. (PID: ${pid})"

echo "Daemon $prgtitle2 is running. (PID: ${pid2})"

return 0

else

echo "Daemon might crash. (PID: ${pid} file remains)"

return 1

fi

else

echo "Daemon not started."

return 0

fi

}

restart() {

stop

if [ $? -ne 0 ]; then

return 1

fi

sleep 2

start

return $?

}

case "$1" in

start | stop | status | restart)

$1

;;

*)

echo "Usage: $0 {start|stop|status|restart}"

exit 2

esac

#exit $

exit 0
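Two details of the script above are worth a note. A redirection stored inside a variable (as in `prgfile`) is not interpreted as a redirection when the variable is expanded, and `pgrep -fl` matches full command lines, so it can return more than one PID (for example both the `sudo` wrapper and ffmpeg itself). The following is only a sketch of an alternative pattern, not the script I actually ran: it records the PID with `$!` right after launching. The helper names `start_bg`, `is_running`, and `stop_bg` are hypothetical.

```shell
# Start a command in the background, discard its output,
# and record its PID in the given pidfile.
# Usage: start_bg PIDFILE COMMAND [ARGS...]
start_bg() {
    pidfile=$1
    shift
    "$@" > /dev/null 2>&1 &
    echo $! > "$pidfile"
}

# Succeed if the process recorded in the pidfile is still alive.
is_running() {
    [ -f "$1" ] && kill -0 "$(cat "$1")" 2> /dev/null
}

# Send SIGTERM to the recorded process, wait up to about 10 seconds
# for it to exit, then remove the pidfile.
stop_bg() {
    pidfile=$1
    [ -f "$pidfile" ] || return 1
    pid=$(cat "$pidfile")
    kill "$pid" 2> /dev/null || { rm -f "$pidfile"; return 1; }
    tries=0
    while kill -0 "$pid" 2> /dev/null && [ "$tries" -lt 10 ]; do
        sleep 1
        tries=$((tries + 1))
    done
    rm -f "$pidfile"
}

# Example (not run here):
#   start_bg /tmp/ffserver.pid /usr/local/bin/ffserver -f /etc/ffserver.conf
#   is_running /tmp/ffserver.pid && echo "ffserver is running"
#   stop_bg /tmp/ffserver.pid
```

Passing the command as separate arguments rather than one string also avoids the nested-quote problem around `"hw:1,0"`.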